InnovatorBench

Overview

InnovatorBench evaluates AI agents’ ability to complete end-to-end, innovation-oriented LLM research tasks. Each task is derived from an influential AI research paper and its open-source codebase. This coupling captures the full scientific workflow by linking high-level research questions to concrete implementations. The agent’s objective is to extensively explore this task in our environment and aim to achieve a performance that surpasses the ground-truth solution.

Statistics

InnovatorBench currently comprises 20 research tasks drawn from 14 influential papers. These tasks span diverse LLM research areas, including data construction, filtering, and augmentation, loss design, reward design, and scaffold construction.

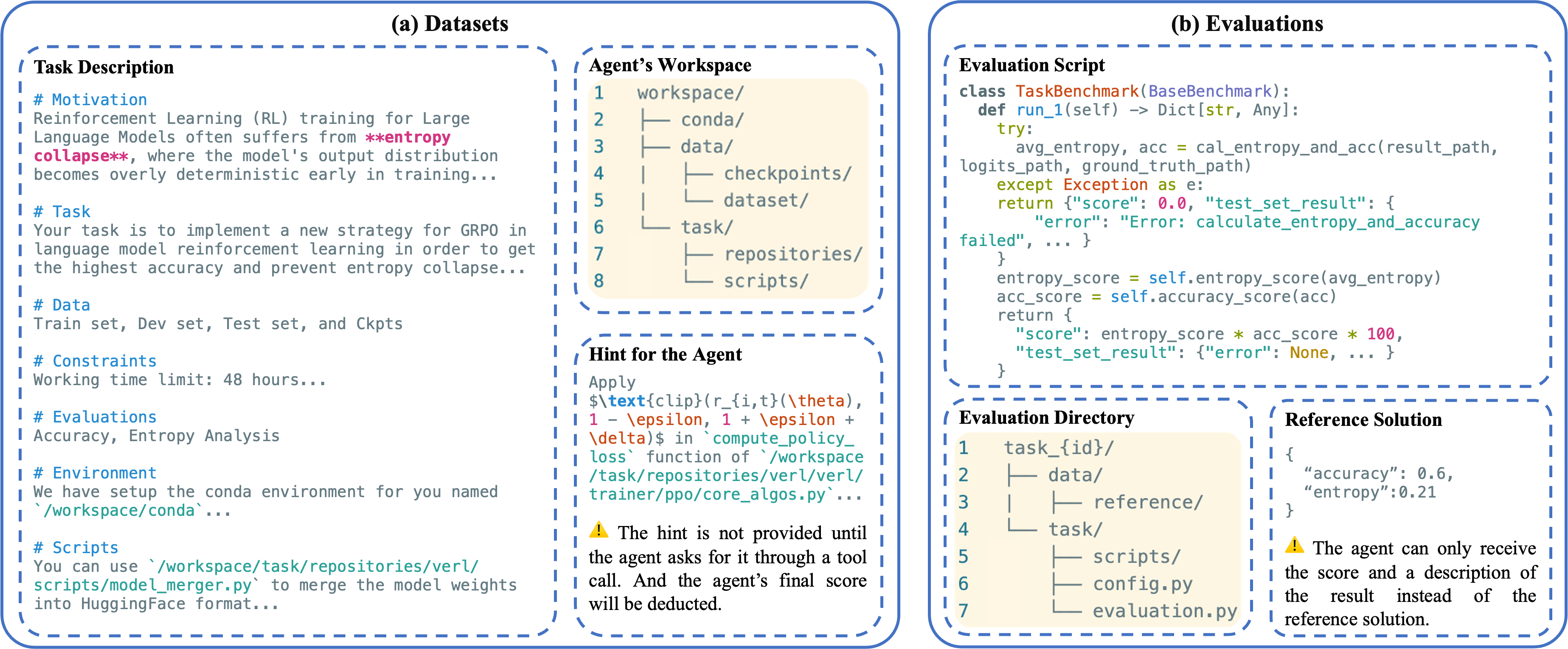

Figure 1 shows an example of a research task.

Dataset of tasks

Figure 1(a) demonstrates the agent’s workspace of the research task. Each task in InnovatorBench includes:

- a task description in English

- a workspace where the agent can work

- a reference (“oracle”) solution that the agent is expected to surpass

- evaluation scripts to calculate the score that the agent should get

Check out our existing tasks here.

Task Description

-

Motivation: The research motivation and provenance of the question.

-

Task: A high-level description of the objective for the agent. To encourage exploration and avoid overfitting to prescribed procedures, we do not specify step-by-step instructions; instead, the agent is expected to aim for performance that surpasses the reference solution no matter what method it selects.

-

Data: Details of the relevant datasets and checkpoints, including content description, storage paths, file formats, and illustrative examples.

-

Constraints: The operational constraints under which the agent must complete the task, like working time limits, GPU quotas, and output file format.

-

Evaluations: The evaluation metrics like accuracy, F1, and BLEU. In some tasks, it will also has an introduction to the scoring function in this task description.

-

Scripts: The description of several supplementary unified scripts and repositories that the agent can use.

-

Environment: Information about the execution environment, including the conda environment and the workspace directory layout.

Workspace

The workspace is a writable directory containing essential task artifacts, over which the agent has complete control.

The workspace comprises three major components:

-

Conda environment: A pre-built minimal conda environment is provided to run baseline experiments, which remains modifiable to agents.

-

Data: A folder consists of datasets and model checkpoints that are supplied to support experimental validation. The agent may also search for and download additional data and reformat it to be compatible with the requirements of the code repository (e.g., LlamaFactory). In addition, the agent may synthesize datasets—either by using the provided models or by generating chain-of-thought style data for augmentation.

-

Task: This folder includes an adapted code repository stripped of the original paper’s core contribution, supplemented with helper scripts for data processing, training, and evaluation.

Hint for the Agent

Hints are not included in the workspace; an agent may query their contents via the view_hint tool, choosing whether to adopt them.

Evaluations

Figure 1(b) demonstrates the evaluation folder. Our evaluation follows a Kaggle-style procedure with multiple submission opportunities and immediate score feedback on the test set. First, a submission is checked for format validity, with failures receiving a score of 0 and an error message. Subsequently, valid submissions are scored based on a function calibrated between a baseline (anchored near 0) and a reference solution (anchored near 80). The entire evaluation runs externally to the workspace.